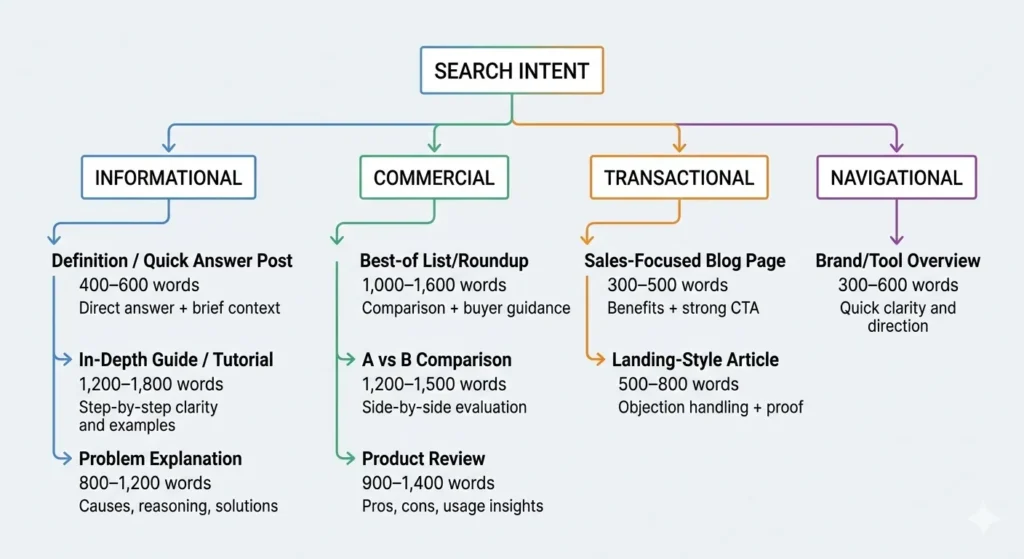

How to Decide Blog Post Length Based on Search Intent?

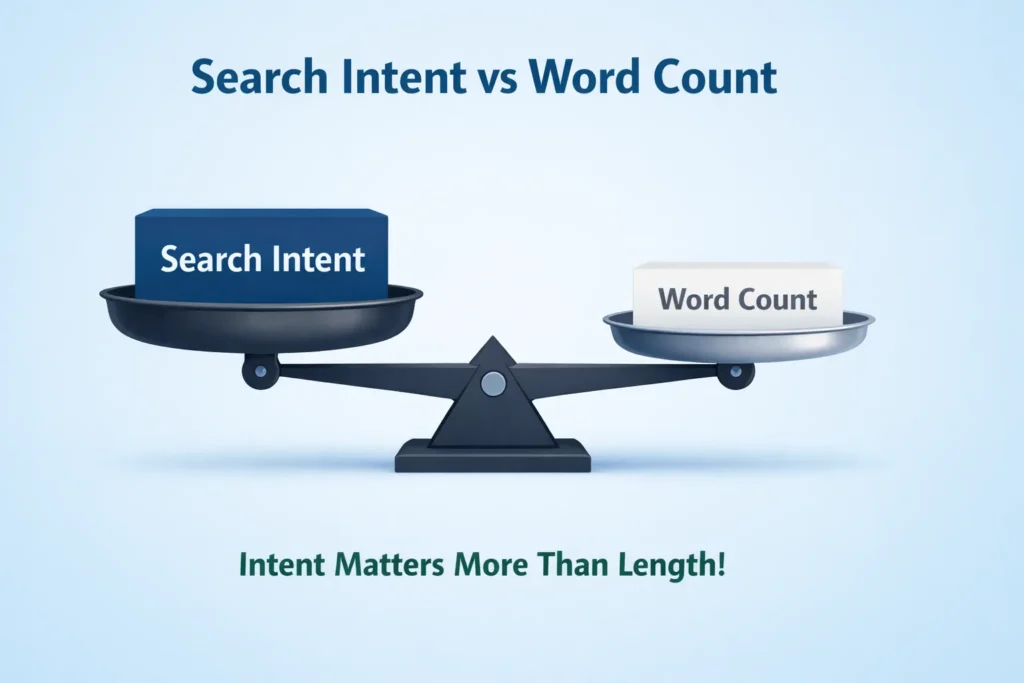

Word count isn’t a goal. It’s a byproduct. The real driver is intent. Once you understand what the…

Content Decoded analyzes how Google's algorithms interpret quality, trust, intent, and usefulness, moving beyond SEO tactics to understand the systems themselves.

Dive into analyses, trust signals, policies, and more — all structured to help you decode search evaluation systems.

Fresh breakdowns of how search systems think, judge, and reward content.

Search Intent & SERP Interpretation

How to Decide Blog Post Length Based on Search Intent?

Word count isn’t a goal. It’s a byproduct. The real driver is intent. Once you understand what the…

Helpful Content & Quality Systems

Why Thin Content Is Different from Short Content?

Short does not mean thin. That confusion ruins a lot of good writing. Think espresso versus a bag of chips.…

Decode this → Search Intent & SERP Interpretation

Search Intent & SERP Interpretation

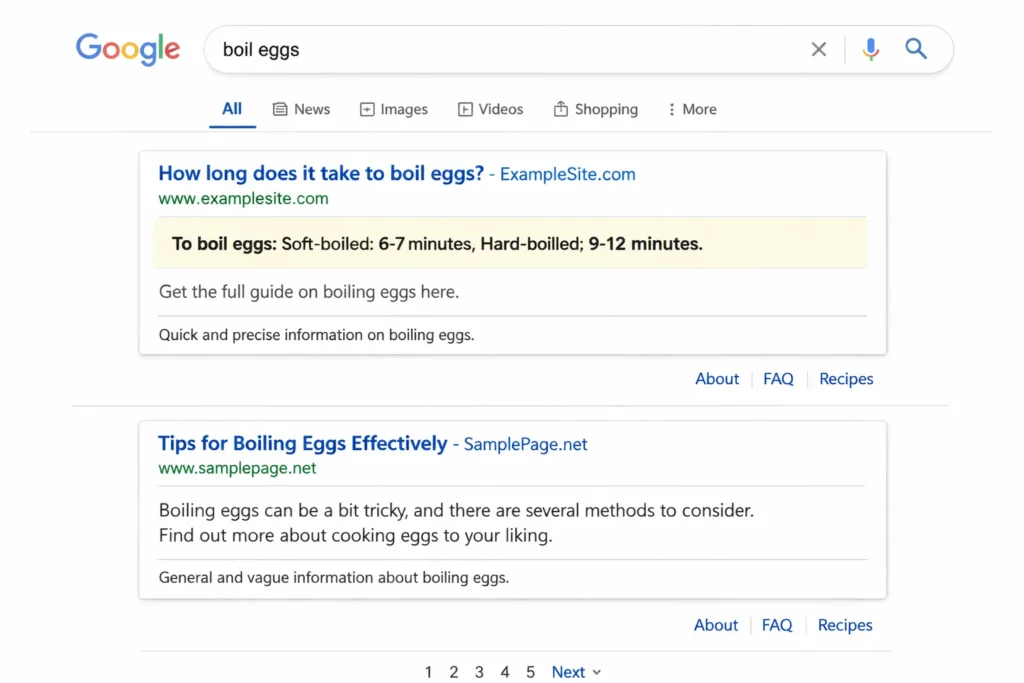

Why matching competitor word count is a mistake (new blog) Most creators open top-ranking pages, scroll straight to the bottom, note the word count, and treat it like…

Helpful Content & Quality Systems

How long should a blog post be for SEO?

Most blog posts that rank on page one sit somewhere between 1,200 and 2,000 words. That’s the practical range…

E-E-A-T & Trust Interpretation

How trust signals work across multiple pages

Imagine publishing one excellent article. It’s detailed, well-structured, and genuinely helpful. Then you publish five more that are thin, vague, or…

Decoding the gap between SEO advice and algorithmic reality

Hi, I'm Yash Gupta. Most SEO advice focuses on tactics: "Do this, rank higher." But Google doesn't evaluate tactics. It evaluates content quality, trustworthiness, and usefulness through complex, interconnected systems.

This site exists to bridge that gap: moving from "what to do" to "how systems think".

Why 800-word pages sometimes beat 4,000-word guides under Helpful Content Systems.

Why credentials don't automatically build trust, and how Google evaluates expertise contextually.

Why sites can follow every guideline and still lose traffic — and how policy updates get misread.

How Google sometimes rewards "incomplete" answers and why matching intent doesn't guarantee rankings.

Why perfect formatting doesn't help if content lacks substance, and how hierarchy shapes evaluation.

Why AI content passes checks but fails rankings, and how automation weakens site-wide trust signals.

Understanding how Google's evaluation systems (Helpful Content, E-E-A-T, Quality Raters) actually work together, not just what they say individually.

Pinpointing where common SEO advice diverges from algorithmic reality — and why those gaps persist.

Looking beyond individual case studies to identify system-wide patterns in how content is evaluated.

Translating system understanding into actionable insights that work within Google's actual evaluation frameworks.

"SEO isn't about gaming algorithms — it's about understanding how systems evaluate quality, then creating content that naturally satisfies those criteria."

Applying system-level understanding to real-world content and SEO challenges

Content that works within search evaluation systems

I apply the same system-level analysis from this blog to create content that naturally satisfies Google's evaluation criteria rather than just following surface-level SEO rules.

Local expertise with global system understanding

I'm based in Firozabad and serve clients globally, combining local market understanding with deep knowledge of how search systems evaluate quality at scale.

Both services apply the same system-level analysis discussed in this blog's articles.