How Google Assigns Trust at the Domain Level

If two pages from different websites answer the same query equally well, why does one dominate while the other disappears? The difference rarely lives on the page itself. It lives in the domain behind it.

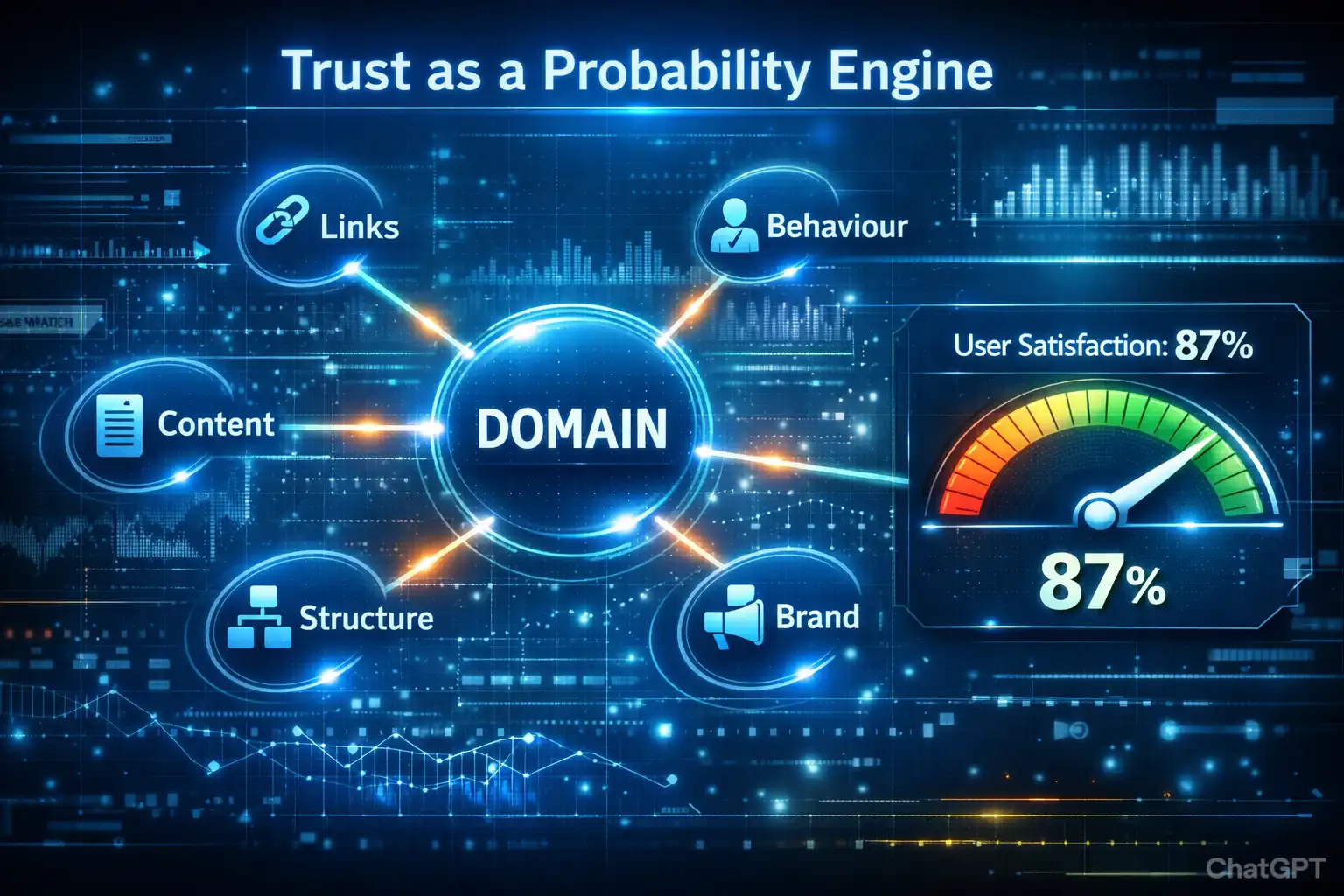

Google does not judge URLs in isolation. It studies patterns across an entire site and builds predictive confidence over time. Trust, in this sense, is probability: the likelihood that users will leave satisfied. That probability is shaped by behavioural history, link neighbourhoods, topical consistency, structural stability, and brand signals working together. What this really means is simple: rankings are not awarded to pages alone. They are extended to domains that have earned systemic credibility.

📌 TL;DR — No Time? Read This.

Google doesn’t rank pages in isolation. It ranks its confidence in the domain behind them.

That confidence comes from patterns. Behavioural history, topical depth, link relationships, technical stability, brand signals, and time all stack together. One strong article can rank for a while, but it can’t override a weak system. If most of a site is thin, scattered, or unstable, that pattern drags everything down.

Trust works like probability. Each time your page appears in search, Google is asking: if we show this domain again, will users feel satisfied? Consistent engagement, steady publishing, clean architecture, and focused topic clusters increase that probability. Sudden link spikes, scattered content, and neglected updates reduce it.

Backlinks matter, but context and relevance matter more. Domain age helps only when paired with performance. Removing low-quality pages can strengthen signal clarity, but only if done strategically.

What this really means is simple: rankings are not rewards for effort. They’re predictions of reliability. Build a stable, focused, well-maintained ecosystem, and trust compounds. Chase shortcuts, and volatility follows.

Google Is Not Ranking Pages. It’s Ranking Predictability

Here’s the thing: trust isn’t a visible score sitting in a dashboard somewhere. It’s accumulated consistency. Google doesn’t reward a single impressive page. It rewards patterns. If your site repeatedly satisfies search intent, stays topically coherent, and behaves reliably, it becomes predictable. And predictable systems are safer to rank.

Why “Domain Authority” Isn’t a Real Metric — But the Effect Is Real

Google has said many times that it doesn’t use Domain Authority, the third-party metric popularised by SEO tools such as Moz. There’s no official public score attached to your domain.

But domain-level reputation absolutely influences rankings.

What this really means is:

- It’s not a visible number you can optimise toward.

- It’s not a universal scale shared across the web.

- It behaves like internal confidence modelling built from patterns.

Google doesn’t rank based on a badge. It observes repeated signals across your site and forms expectations.

Trust as a Probability Model

Imagine a librarian deciding which author to recommend. If readers consistently return satisfied, the librarian gains confidence.

Google works similarly.

Each time your domain ranks, Google is essentially asking: if we show this again, will users feel it was worth the click? Trust becomes a probability calculation. The lower the perceived risk, the higher the ranking stability.
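
The probability framing can be made concrete with a toy Beta-Bernoulli model. This Python sketch only illustrates the idea and does not describe how Google computes trust. The `SatisfactionBelief` class, the uniform prior, and the notion of a single “satisfied” flag per visit are all hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass
class SatisfactionBelief:
    """Toy Beta(alpha, beta) belief about how often a domain satisfies users.

    Hypothetical illustration only; not a description of Google's systems.
    """

    alpha: float = 1.0  # prior pseudo-count of satisfied visits (uniform prior)
    beta: float = 1.0   # prior pseudo-count of unsatisfied visits

    def observe(self, satisfied: bool) -> None:
        # Each observed visit adds one pseudo-count to the matching side.
        if satisfied:
            self.alpha += 1
        else:
            self.beta += 1

    @property
    def mean(self) -> float:
        # Expected probability that the next visit is satisfying.
        return self.alpha / (self.alpha + self.beta)

    @property
    def variance(self) -> float:
        # Variance of the Beta distribution: shrinks as evidence accumulates.
        n = self.alpha + self.beta
        return (self.alpha * self.beta) / (n * n * (n + 1))


new_site = SatisfactionBelief()
for outcome in [True] * 3:  # three satisfying visits, no other history
    new_site.observe(outcome)

old_site = SatisfactionBelief()
for outcome in [True] * 75 + [False] * 25:  # long, imperfect but consistent record
    old_site.observe(outcome)

print(f"new site: mean={new_site.mean:.2f}, variance={new_site.variance:.4f}")
print(f"old site: mean={old_site.mean:.2f}, variance={old_site.variance:.4f}")
```

Three satisfying visits give the new site a higher point estimate (0.80) than the established site (about 0.75), but its variance is more than ten times larger. In this toy model, that is the sense in which a long, consistent history lowers perceived risk even when a newcomer looks better on paper.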

Why One Viral Post Cannot Lift a Weak Domain

A single strong URL can perform well. But it can’t rewrite site history.

| Scenario | Outcome |
|---|---|
| Strong page + strong domain | Sustained rankings |
| Strong page + weak domain | Temporary lift, unstable position |

Systemic strength wins. If most of your site shows thin content, inconsistent structure, or weak engagement, one viral article won’t override that pattern. Google evaluates the whole ecosystem, not just a moment of success.

This is why focusing only on surface-level improvements rarely works. Many site owners redesign their homepage instead of strengthening the entire domain. But as explained in this detailed guide on why a hero page can’t fix a weak website, structural weakness eventually affects rankings.

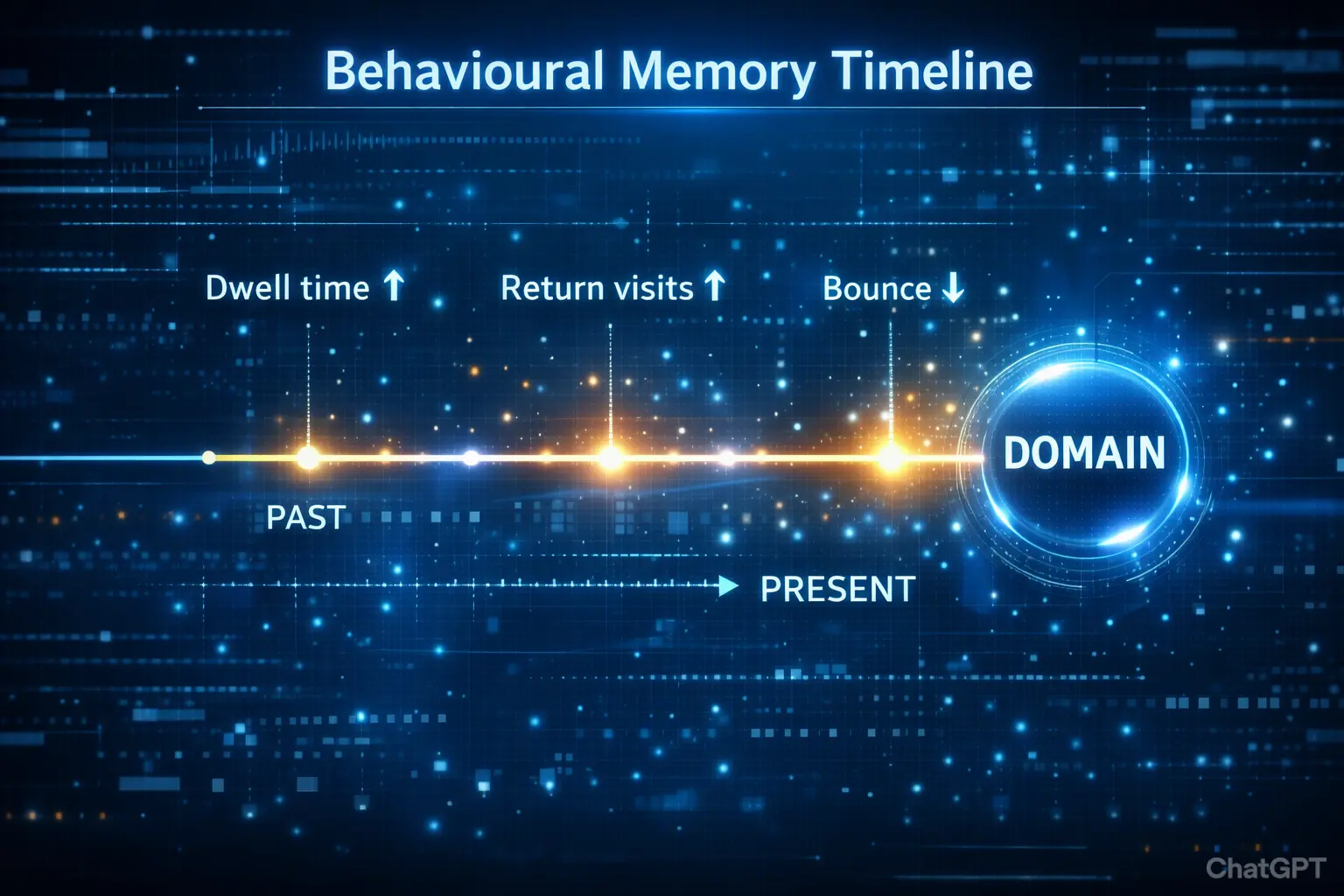

Historical Performance: Your Domain Has a Behavioural Memory

Past performance shapes future visibility. Search engines don’t treat every page as a fresh start. They track how users respond over time. Your domain builds a reputation based on patterns, not promises. What this really means is simple: behaviour compounds.

Engagement Patterns Google Watches Quietly

Behind the scenes, user behaviour tells a story. Not loudly. But consistently.

- Dwell time – How long users stay before returning to search results. Longer often signals depth and relevance.

- Pogo-sticking – Quick back-and-forth between results suggests the answer wasn’t satisfying.

- Return visits – When users come back directly, it signals trust and usefulness.

- Query refinement – If users stop refining searches after visiting your page, you likely delivered the final answer.

- Scroll depth and interaction – Engagement with content elements hints at genuine interest.

The pattern matters more than any single visit.

The Update Pattern: Do You Maintain or Abandon Content?

Some domains publish and forget. Others maintain and refine. Search engines notice the difference. Updating outdated information, improving clarity, and keeping links healthy all signal ongoing responsibility.

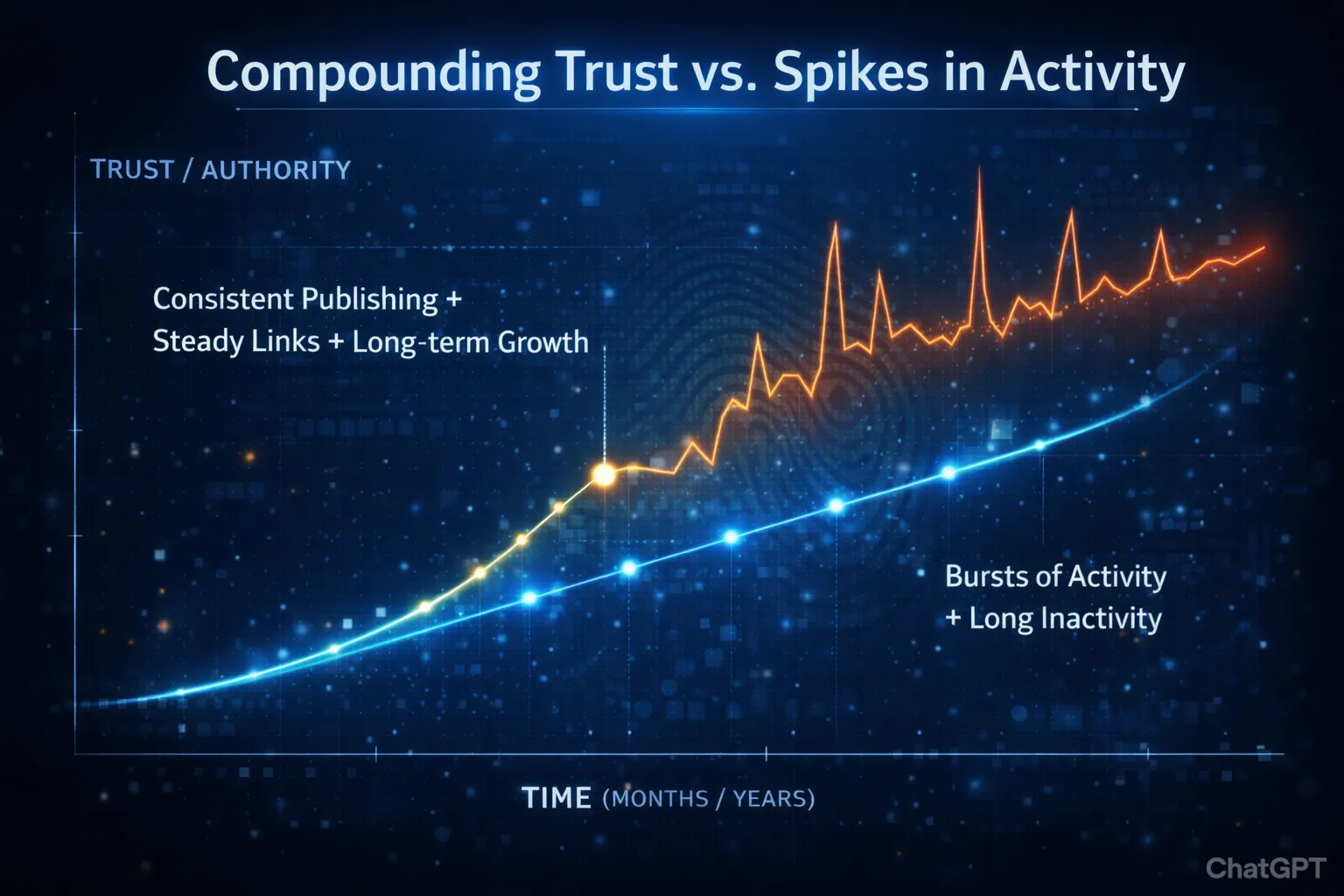

Consistency in publishing rhythm also builds reliability. Sporadic bursts followed by silence feel unstable.

Micro-checklist:

- Refresh outdated statistics

- Improve thin sections

- Fix broken links

- Add internal connections

- Maintain steady publishing intervals
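
A small script can turn the first item on that checklist into a recurring report. This sketch assumes you keep a page inventory with a last-updated date per URL. The URLs, the dates, and the 90-day review cycle are hypothetical choices, not a Google threshold.

```python
from datetime import date, timedelta

# Hypothetical inventory: URL -> date the content was last meaningfully updated.
pages = {
    "/guide/technical-seo": date(2025, 11, 2),
    "/blog/crawl-budget": date(2023, 6, 14),
    "/blog/log-file-analysis": date(2024, 1, 20),
}


def stale_pages(inventory, today, max_age_days=90):
    """Return URLs not updated within max_age_days, oldest first.

    The 90-day default is an assumed quarterly review cycle, not a Google rule.
    """
    cutoff = today - timedelta(days=max_age_days)
    stale = [(updated, url) for url, updated in inventory.items() if updated < cutoff]
    return [url for _, url in sorted(stale)]


print(stale_pages(pages, today=date(2026, 1, 15)))
```

Sorting oldest first puts the most neglected pages at the top of the refresh queue.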

Recovery Speed After Core Updates

When major algorithm updates roll out, established domains often recover faster. Why? Because they already have a behavioural history of satisfying users. That baseline trust cushions volatility.

Long-term stability beats short-term spikes every time.

The Link Graph: Trust Flows Through Relationships

Links aren’t votes. They’re relationships inside a living network. Google doesn’t just count how many sites point to you. It studies who is connected to whom, how often, and in what context. What this really means is that trust moves through pathways, not raw numbers.

The Seed Site Theory (Why Distance Matters)

Here’s the basic idea:

- Google identifies a group of highly trusted websites.

- These become reference points, often called seed sites.

- Sites directly linked from them inherit stronger trust.

- As links move further away from these seeds, trust weakens.

Think of it like circles expanding outward. The closer you are to a reliable source, the stronger the signal. The farther you drift into loosely connected territory, the more diluted that trust becomes.
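
The seed-site idea can be sketched as distance decay over a link graph. This is a simplified illustration under stated assumptions: the graph, the domains, and the 0.5 decay per hop are hypothetical. The published TrustRank research propagates trust from a seed set with a biased PageRank computation rather than plain hop counting, and none of this describes Google's production systems.

```python
from collections import deque


def seed_distance_trust(links, seeds, decay=0.5):
    """Assign each reachable site trust = decay ** (hops from the nearest seed).

    Simplified illustration of the seed-site idea; decay=0.5 is arbitrary.
    """
    trust = {seed: 1.0 for seed in seeds}
    queue = deque(seeds)
    while queue:
        site = queue.popleft()
        for target in links.get(site, []):
            if target not in trust:  # breadth-first: first visit = fewest hops
                trust[target] = trust[site] * decay
                queue.append(target)
    return trust


# Hypothetical link graph: site -> sites it links to.
links = {
    "seed-university.edu": ["industry-journal.com"],
    "industry-journal.com": ["your-site.com", "niche-blog.com"],
    "niche-blog.com": ["link-farm.biz"],
}

scores = seed_distance_trust(links, seeds=["seed-university.edu"])
for site, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{site:22} {score:.3f}")
```

Trust halves with every hop in this model: the site one step from the seed keeps 0.5, while the link farm three hops out keeps only 0.125.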

Topical Relevance Beats Raw Volume

A handful of tightly related links often outperforms dozens of unrelated ones.

| Relevant Niche Links | Random High-Authority Links |
|---|---|
| Same industry | Unrelated industries |
| Contextual mentions | Sidebar or footer links |
| Consistent theme | Scattered topics |
| Stronger trust flow | Weak contextual trust |

Trust is contextual. Alignment matters more than metrics.

Manipulative Patterns Google Detects

Google evaluates patterns, not isolated links.

Red Flags:

- Sudden unnatural link spikes

- Obvious reciprocal link rings

- Clusters of paid or sponsored footprints

- Identical anchor text repetition

It’s the pattern that exposes intent, not a single backlink.
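
One of those red flags, identical anchor text repetition, is easy to check in your own backlink export. This sketch measures how much of a profile a single anchor dominates. The anchor lists are hypothetical, and Google has not published a threshold for a “safe” share.

```python
from collections import Counter


def anchor_concentration(anchors):
    """Return (most common anchor, its share of all backlinks), case-insensitive."""
    if not anchors:
        return None, 0.0
    counts = Counter(anchor.strip().lower() for anchor in anchors)
    top_anchor, top_count = counts.most_common(1)[0]
    return top_anchor, top_count / len(anchors)


# Hypothetical anchor-text exports for two backlink profiles.
natural = ["Acme", "acme.com", "this guide", "Acme's crawl study", "here", "best seo tools"]
manipulated = ["best seo tools"] * 8 + ["Acme", "here"]

print(anchor_concentration(natural))      # every anchor appears once
print(anchor_concentration(manipulated))  # one exact-match anchor dominates
```

In the natural profile no anchor exceeds one-sixth of the links; in the manipulated one a single commercial phrase accounts for 80 per cent, which is the kind of footprint the list above describes.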

Topical Depth: Staying in One Lane Builds Authority

General trust is a myth. Search systems don’t trust websites in a broad, emotional way. They evaluate patterns inside specific subject areas. What this really means is simple: authority is earned per topic, not per domain name.

Cluster Coverage vs Scattered Publishing

A focused content hub builds layered understanding. A scattered blog weakens it.

Think of it like this:

Core topic → Subtopics → Supporting questions → Case examples → Updates and comparisons

That structure creates reinforcement. Each piece supports the others.

A strong cluster usually includes:

- A comprehensive pillar guide

- Supporting deep-dive articles

- FAQs targeting long-tail intent

- Case studies or practical breakdowns

- Consistent internal links connecting everything

When you publish about marketing, then crypto, then health supplements, then travel hacks, you reset topical signals every time. The algorithm sees curiosity, not expertise.

Internal Linking as Context Reinforcement

Internal links are not just navigation tools. They’re also context signals.

When your technical SEO guide links to crawl budget, indexing, and log file analysis articles, you’re defining a clear expertise neighbourhood.

Example: A pillar on email marketing links to segmentation strategy and deliverability optimisation. Now the system understands the depth of that lane.
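
A quick way to verify that a pillar and its cluster actually reinforce each other is to check both link directions. The page paths and the link map below are hypothetical; in practice the map would come from a crawl of your own site.

```python
# Hypothetical internal link map: page path -> set of paths it links to.
internal_links = {
    "/email-marketing": {"/email-marketing/segmentation", "/email-marketing/deliverability"},
    "/email-marketing/segmentation": {"/email-marketing"},
    "/email-marketing/deliverability": set(),
    "/email-marketing/subject-lines": {"/email-marketing"},
}


def cluster_link_gaps(links, pillar, cluster_pages):
    """List missing pillar-to-cluster and cluster-to-pillar links."""
    gaps = []
    for page in cluster_pages:
        if page not in links.get(pillar, set()):
            gaps.append(f"pillar does not link to {page}")
        if pillar not in links.get(page, set()):
            gaps.append(f"{page} does not link back to the pillar")
    return gaps


cluster = [
    "/email-marketing/segmentation",
    "/email-marketing/deliverability",
    "/email-marketing/subject-lines",
]
for gap in cluster_link_gaps(internal_links, "/email-marketing", cluster):
    print(gap)
```

Here the deliverability article never points back to the pillar, and the subject-lines article exists but the pillar never mentions it, so that part of the lane stays invisible from the hub.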

When Topic Drift Kills Domain Confidence

Before: A site publishes 40 articles on SaaS growth. Clear focus. Strong interlinking.

After: It suddenly publishes casino reviews and celebrity gossip.

That shift signals instability. Depth creates authority. Drift erodes it.

Site-Wide Quality Patterns: Google Sees the Whole System

One strong article cannot cover cracks in the foundation. Search engines don’t judge pages in isolation. They look at the domain as a system. Patterns matter more than exceptions. If most pages feel thin, inconsistent, or generic, that signal bleeds into the few pages that are actually strong. What this really means is simple: domain-level trust is earned collectively, not individually.

Thin Page Ratios Across Categories

Think in ratios, not individual URLs. Imagine a site with 1,000 indexed pages. If 650 are short, repetitive, or lightly reworded summaries, and only 350 offer real depth, that imbalance becomes visible. Even if those 350 are solid, the overall quality percentage sits at 35 per cent.

Now flip it. If 80 per cent of pages show depth, original thinking, and structural clarity, the system reads differently. Categories with high thin-page concentration send a quiet but persistent signal: this site produces filler at scale.
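
The ratio argument is simple arithmetic, but computing it per category shows where the drag comes from. This sketch uses word count as a crude stand-in for depth (it is not one; a long page can still be filler) with a hypothetical 600-word cut-off and a made-up inventory that reproduces the 350-of-1,000 example above.

```python
from collections import defaultdict

THIN_WORD_THRESHOLD = 600  # assumed cut-off; word count is only a crude proxy for depth


def quality_shares(pages):
    """Return (site-wide deep-page share, {category: deep-page share})."""
    totals = defaultdict(int)
    deep = defaultdict(int)
    for category, word_count in pages:
        totals[category] += 1
        if word_count >= THIN_WORD_THRESHOLD:
            deep[category] += 1
    site_wide = sum(deep.values()) / sum(totals.values())
    return site_wide, {c: deep[c] / totals[c] for c in totals}


# Hypothetical 1,000-page inventory of (category, word count): 350 deep, 650 thin.
pages = (
    [("guides", 1800)] * 300 + [("guides", 400)] * 100
    + [("tags", 150)] * 500
    + [("news", 900)] * 50 + [("news", 250)] * 50
)

site_wide, by_category = quality_shares(pages)
print(f"site-wide: {site_wide:.0%}")
for category, share in by_category.items():
    print(f"{category:7} {share:.0%}")
```

Site-wide the share is 35 per cent, matching the example, but the per-category view shows the tag archives contributing no deep pages at all. That makes them the first candidates to merge or expand.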

AI-Generated Clusters Without Human Signals

Automation alone is not the problem. The absence of human proof is.

Ask yourself:

- Does the content include firsthand examples?

- Are there original screenshots, data, or experiments?

- Is there a clear point of view?

- Are claims supported by specific context?

No human fingerprint means no defensible authority.

Bounce Patterns Across the Domain

If most URLs show quick exits, short dwell time, and low interaction, that pattern compounds. It suggests expectation mismatch across the domain.

When dissatisfaction becomes consistent, rankings follow the pattern.

Structural Stability: Technical Signals of Trust

Trust is structural as much as editorial. Strong writing matters, but search engines also look at how reliably a site is built and maintained. Clean systems signal seriousness. Messy systems signal risk.

Crawl Efficiency and Clean Architecture

When bots land on your domain, they follow the structure before they read the meaning. If they struggle to move, trust drops.

Technical signals to check:

- Shallow crawl depth for key pages

- Logical hierarchy from homepage to subtopics

- Clear internal linking between related content

- XML sitemap that reflects live URLs only

- No orphan pages

- Minimal redirect chains

- Consistent canonical tags

Architecture should feel predictable. Every page must have a defined place in the ecosystem.
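
Several of these checks (crawl depth, orphan pages, a sitemap that reflects reachable URLs) can be computed from an internal link map with a breadth-first search from the homepage. The map and sitemap below are hypothetical, and the three-click depth limit is a common rule of thumb rather than an official rule.

```python
from collections import deque


def crawl_audit(links, sitemap_urls, home="/", max_depth=3):
    """Breadth-first crawl of an internal link map from the homepage.

    Returns (click depth per reachable URL, orphaned sitemap URLs, URLs deeper
    than max_depth). max_depth=3 is a rule of thumb, not an official limit.
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        url = queue.popleft()
        for target in links.get(url, []):
            if target not in depth:  # first discovery is the shortest click path
                depth[target] = depth[url] + 1
                queue.append(target)
    orphans = sorted(set(sitemap_urls) - set(depth))
    too_deep = sorted(url for url, d in depth.items() if d > max_depth)
    return depth, orphans, too_deep


# Hypothetical site: page -> pages it links to, plus the URLs listed in the sitemap.
links = {
    "/": ["/guides/", "/blog/"],
    "/guides/": ["/guides/technical-seo"],
    "/guides/technical-seo": ["/guides/technical-seo/crawl-budget"],
    "/guides/technical-seo/crawl-budget": ["/guides/technical-seo/crawl-budget/log-files"],
}
sitemap = [
    "/", "/guides/", "/guides/technical-seo",
    "/guides/technical-seo/crawl-budget",
    "/guides/technical-seo/crawl-budget/log-files",
    "/old-landing-page",
]

depth, orphans, too_deep = crawl_audit(links, sitemap)
print("orphans:", orphans)
print("too deep:", too_deep)
```

`/old-landing-page` sits in the sitemap but nothing links to it, and the log-files article needs four clicks from the homepage; both are structural gaps a crawler would notice.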

HTTPS, Mobile Usability, and Core Web Vitals

Security and usability are measurable. HTTPS encryption, mobile responsiveness, and performance metrics within Google’s Core Web Vitals framework show operational stability.

Quick audit steps:

- Confirm a valid SSL certificate

- Test mobile layout across devices

- Review Largest Contentful Paint (LCP), Cumulative Layout Shift (CLS), and Interaction to Next Paint (INP)

- Remove intrusive interstitials
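
Google publishes fixed thresholds for the three Core Web Vitals, assessed at the 75th percentile of page loads: LCP is good at 2.5 seconds or less and poor above 4 seconds, INP is good at 200 ms or less and poor above 500 ms, and CLS is good at 0.1 or less and poor above 0.25. This sketch rates a value against those bands. The sample LCP readings are hypothetical, and the nearest-rank percentile is a simplification of how field-data tools aggregate measurements.

```python
import math

# Core Web Vitals bands: (upper bound for "good", upper bound for "needs improvement").
# Anything above the second value is "poor".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric, p75_value):
    """Classify a 75th-percentile value as good / needs improvement / poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if p75_value <= good:
        return "good"
    if p75_value <= needs_improvement:
        return "needs improvement"
    return "poor"


def percentile_75(samples):
    """Nearest-rank 75th percentile (a simplification of field-data aggregation)."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


lcp_samples = [1.8, 2.1, 2.4, 2.9, 3.2, 2.2, 1.9, 4.5]  # hypothetical seconds
lcp_p75 = percentile_75(lcp_samples)
print("LCP", lcp_p75, rate("LCP", lcp_p75))
print("INP", 180, rate("INP", 180))
print("CLS", 0.3, rate("CLS", 0.3))
```

Rating the 75th percentile rather than the average means a quarter of slow page loads is enough to pull a page out of the "good" band.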

Consistency in URL and Site Behaviour

Frequent structural overhauls create instability. Constant URL changes, shifting navigation, and unpredictable layouts send mixed signals.

Stable domains evolve gradually. They improve without erasing their history. That continuity builds long-term algorithmic confidence.

For deeper official context, see Google’s rater guidelines on E‑E‑A‑T →

Brand Signals: Trust Beyond SEO

A trusted domain leaves footprints far beyond its own pages. Rankings are influenced not just by what sits on your site, but by how the wider web reacts to it. When people talk about you, search for you, and recognise you, that’s trust in motion.

Branded Search Volume as Confidence Indicator

When users type your brand or domain name directly into a search engine, it signals awareness and belief. They are not browsing randomly. They are looking for you.

Picture this: someone hears about a marketing blog from a friend. Instead of searching “best SEO tips,” they search the blog’s name itself. That action reflects familiarity. Repeated at scale, it becomes a measurable trust signal. Search systems interpret this as demand driven by reputation, not accident.
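
If you export query data (for example from Search Console’s performance report), you can track what share of clicks comes from branded queries. The brand “acme” and the rows below are hypothetical, and whole-word matching is a simplifying assumption that will miss misspellings.

```python
import re


def branded_click_share(rows, brand_terms):
    """Share of clicks from queries containing a brand term (whole word, any case)."""
    pattern = re.compile(
        r"\b(" + "|".join(map(re.escape, brand_terms)) + r")\b", re.IGNORECASE
    )
    total = sum(clicks for _, clicks in rows)
    branded = sum(clicks for query, clicks in rows if pattern.search(query))
    return branded / total if total else 0.0


# Hypothetical (query, clicks) rows from a query export.
rows = [
    ("acme seo blog", 120),
    ("acme crawl budget guide", 40),
    ("best seo tips", 300),
    ("how to fix orphan pages", 140),
]
print(f"{branded_click_share(rows, ['acme']):.0%}")
```

Watch both the share and the absolute branded clicks over time: a rising share can also mean non-branded traffic is falling.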

Mentions Without Links Still Matter

Links help, but simple mentions count too. Modern systems understand entities and relationships, not just hyperlinks.

Common mention sources include:

- Forum discussions

- News articles

- Social media threads

- Community Q&A platforms

- Industry roundups

Consistent references across these spaces reinforce authority through co-citation patterns.

Clear Identity and Author Transparency

Trust strengthens when identity is obvious and consistent.

Quick checklist:

- Real author names

- Detailed About page

- Visible contact information

- Consistent tone and expertise

- Author bios tied to credentials

What this really means is simple: credibility grows when people can see who stands behind the content.

Time: The Compounding Layer of Trust

Trust compounds slowly and collapses quickly. Search systems don’t just look at what you publish today. They observe patterns over time. What this really means is simple: history leaves fingerprints.

Publishing Rhythm Over Years

Imagine a site that publishes twice a week for five straight years. No long disappearances. No frantic bursts. Just steady output. That rhythm signals operational stability. It suggests real processes, real people, real intent. Compare that with a domain that posts 40 articles in one month and then goes silent for six. The timeline tells a story before the content is even evaluated. Consistency becomes a quiet credibility layer that machines can measure.
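
Publishing rhythm is one of the few trust factors you can measure directly. This sketch computes the gaps between publish dates and their coefficient of variation (standard deviation divided by the mean), so a steady schedule scores near zero and a burst-then-silence pattern scores high. The dates are hypothetical, and no particular score is known to be a Google cut-off.

```python
from datetime import date, timedelta
from statistics import mean, pstdev


def cadence_stats(publish_dates):
    """Return (mean gap in days, coefficient of variation, longest gap in days)."""
    ordered = sorted(set(publish_dates))
    if len(ordered) < 2:
        raise ValueError("need at least two distinct publish dates")
    gaps = [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]
    avg = mean(gaps)
    return avg, pstdev(gaps) / avg, max(gaps)


# Hypothetical histories: weekly posts vs. a burst followed by months of silence.
steady = [date(2025, 1, 1) + timedelta(weeks=i) for i in range(10)]
bursty = [date(2025, 1, d) for d in range(1, 6)] + [date(2025, 7, 1)]

print(cadence_stats(steady))
print(cadence_stats(bursty))
```

The weekly schedule scores 0; five daily posts followed by a 177-day silence score about 1.9.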

Gradual Link Growth vs Sudden Spikes

Natural growth looks like this:

- Links accumulate steadily as visibility expands.

- Mentions rise alongside content depth and reach.

Artificial growth often looks like this:

- Sharp, unnatural link bursts.

- High-volume acquisition disconnected from publishing history.

Spikes trigger scrutiny. Gradual velocity builds confidence.
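
To spot this kind of spike in your own link data, compare each month against a trailing median of recent months; the median keeps one unusual month from distorting the baseline. The six-month window, the 3x factor, and the monthly counts are hypothetical illustration values, not thresholds Google has published.

```python
from statistics import median


def flag_spikes(monthly_new_domains, window=6, factor=3.0):
    """Return indices of months whose new referring domains exceed
    factor x the median of the preceding `window` months.

    window and factor are arbitrary illustration values, not Google thresholds.
    """
    flagged = []
    for i in range(window, len(monthly_new_domains)):
        baseline = median(monthly_new_domains[i - window:i])
        if baseline > 0 and monthly_new_domains[i] > factor * baseline:
            flagged.append(i)
    return flagged


# Hypothetical new referring domains per month.
history = [4, 5, 6, 5, 7, 6, 8, 7, 60, 9, 8]
print(flag_spikes(history))
```

Only month index 8 (60 new referring domains against a baseline of 6.5) is flagged; the surrounding months stay within normal variation.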

Domain Ageing Into Authority

Domains that maintain clean histories and stable ownership tend to earn baseline trust over time. They have fewer volatility signals and more historical context.

Time doesn’t guarantee authority, but stable longevity strengthens its foundation.

Trust Signal Audit

| The Trust Killer | The 2026 Fix |
|---|---|
| High Bounce Rate | Add a clear answer at the top. |
| Anonymous Authors | Show real names and credentials. |
| Stale Content | Update stats and examples quarterly. |
| Thin Content at Scale | Merge or expand weak pages. |
| Topic Drift | Stick to 1–2 core clusters. |
| Sudden Link Spikes | Earn links steadily, not in bursts. |
| Over-Optimised Anchors | Use natural, varied anchor text. |
| Poor Internal Linking | Build strong pillar + cluster links. |
| Inconsistent Publishing | Publish on a fixed schedule. |
| Weak Engagement Signals | Improve structure and clarity. |
| Broken Links & Redirects | Audit and fix monthly. |
| Slow Page Speed | Optimise Core Web Vitals. |
| Mobile Issues | Ensure a clean, responsive layout. |
| No Branded Searches | Build brand demand outside SEO. |
| No Firsthand Insight | Add real examples and proof. |
| Unreviewed AI Content | Add human editing before publishing. |
| Too Many Low-Value Pages | Prune or consolidate strategically. |
| Frequent URL Changes | Keep the architecture stable. |

What I’ve Seen in Real Projects

After working across different domains and watching multiple updates roll through, patterns stop feeling theoretical. They start repeating. That’s where assumptions fade, and structure becomes visible.

What looked strong but failed:

I once worked on a technically perfect page. Clean markup, fast load time, strong on-page optimisation. It didn’t rank. The domain itself lacked topical depth. One strong page couldn’t compensate for a shallow ecosystem.

What changed when I fixed the pattern:

Instead of tweaking the page again, I expanded the surrounding cluster. Supporting articles, tighter internal links, clearer hierarchy. Rankings moved only after the topic felt complete.

What looked strong but failed:

On another site, traffic stagnated despite constant publishing. Nearly 80 thin URLs diluted the signal. Everything looked active, but nothing felt authoritative.

What changed when I fixed the pattern:

I removed and consolidated weak pages. In one cleanup project, removing the weakest 30% of pages led to stronger rankings for the remaining cluster within two update cycles. Publishing cadence also became consistent, and recovery after updates was noticeably faster.

Trust isn’t fragile at the page level. It’s fragile at the system level.

That’s why content writing services need to think in ecosystems, not standalone articles.

The Final Insight: Google Ranks Confidence, Not Content

Here’s the core truth. Google isn’t handing out trophies for well-written articles. It’s predicting satisfaction before the click ever happens. Rankings are not rewards for effort; they’re bets placed on which domains are most likely to deliver a reliable outcome.

What this really means is that a single polished URL can’t carry the weight alone. Behavioural memory, topical depth, link relationships, structural stability, and brand signals all stack together. Over time, patterns form. If users consistently engage, stay, explore, and return, that history becomes a signal. If a site covers its topic thoroughly across dozens of pages, earns references naturally, and maintains technical consistency, that pattern builds strength. Google isn’t asking whether one article is good. It’s asking whether the domain has proven itself repeatedly.

One standout post may spark attention. Sustained consistency builds trust. And trust compounds through deliberate patterns.

Google doesn’t rank pages. It ranks its confidence in the source behind them.


Frequently Asked Questions About Domain-Level Trust

Can a New Domain Ever Compete With Established Sites?

Yes, but not by copying them. Age alone doesn’t guarantee dominance; consistency over time does. A tightly focused site that grows steadily and structures content with discipline can build trust faster than a scattered older domain chasing shortcuts.

Does Domain Age Automatically Increase Trust?

No. Time without performance means nothing. A domain sitting idle, publishing inconsistently, or accumulating weak signals does not earn automatic credibility. Trust compounds only when strong content patterns, user engagement, and clean link growth reinforce each other.

If I Delete Low-Quality Pages, Will Rankings Improve?

Sometimes, but only when done strategically. Removing weak URLs can sharpen overall signal clarity and improve quality ratios. Random mass deletion, however, can disrupt structure and internal link equity. Think in terms of system balance, not emotional cleanup.

Can Strong Backlinks Override Weak Site Quality?

Backlinks help, but they don’t erase structural flaws. Authority can lift visibility temporarily, yet poor architecture and weak engagement limit the long-term ceiling. Trust is layered. Links amplify what already exists; they rarely fix what’s broken.

How Long Does It Take to Build Domain-Level Trust?

There’s no fixed clock. What matters is publishing rhythm, topical coherence, and steady reinforcement. Trust grows through compounding patterns. Speed is unpredictable; disciplined consistency is not.

— trust compounds through deliberate patterns, not isolated brilliance —